Published on November 4, 2011 | Posted in Seo 101

How to do a quick SEO audit

In my day-to-day job I often have to carry out basic SEO reviews of websites, so I have written up the process I usually follow when reviewing a site. It's a mini how-to guide to the steps and techniques I use when doing an SEO audit.


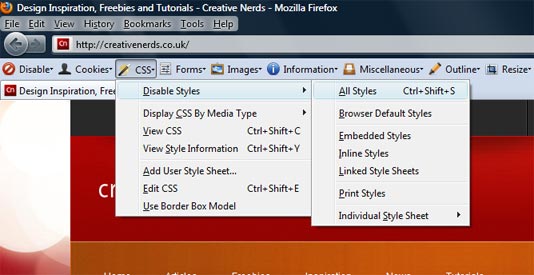

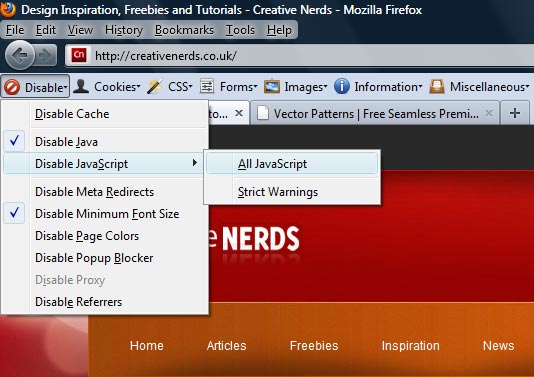

Disable CSS and JavaScript

In Firefox this is straightforward if you have the Web Developer toolbar installed.

Disable CSS

Disable JavaScript

Typical things to look out for are:

- Hidden content (JavaScript show/hide panels and lightboxes are fine, as that content is still on the page)

- Hidden links

- Can you still navigate through the site?

- Is the site still usable? (Not visually, but from a navigation point of view.)
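If you want a rough scripted check alongside the browser, here is a minimal Python sketch using only the standard library. It reads a page's raw HTML, which is roughly what you see with CSS and JavaScript switched off, and separates links inside elements hidden with an inline `display:none`, an inline `visibility:hidden` or the `hidden` attribute from the visible ones. `HiddenLinkFinder` and `audit_links` are hypothetical names of my own. It cannot see content hidden by classes in an external stylesheet or added by JavaScript, which is why switching CSS and JavaScript off in the browser is still worth doing.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they are never pushed on the stack.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "param", "source", "track", "wbr"}


def _hidden_by_inline_style(attrs):
    """True if the element is hidden via an inline style or the hidden attribute."""
    style = (attrs.get("style") or "").replace(" ", "").lower()
    return "display:none" in style or "visibility:hidden" in style or "hidden" in attrs


class HiddenLinkFinder(HTMLParser):
    """Splits <a href> links into visible ones and ones hidden by inline markup."""

    def __init__(self):
        super().__init__()
        self.stack = []          # (tag, hidden) for every currently open element
        self.visible_links = []
        self.hidden_links = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        parent_hidden = self.stack[-1][1] if self.stack else False
        hidden = parent_hidden or _hidden_by_inline_style(attrs)
        if tag == "a" and attrs.get("href"):
            target = self.hidden_links if hidden else self.visible_links
            target.append(attrs["href"])
        if tag not in VOID_TAGS:
            self.stack.append((tag, hidden))

    def handle_endtag(self, tag):
        # Pop back to the matching open tag; this tolerates some unclosed tags.
        for i in range(len(self.stack) - 1, -1, -1):
            if self.stack[i][0] == tag:
                del self.stack[i:]
                break


def audit_links(html):
    """Return (visible_links, hidden_links) found in an HTML string."""
    finder = HiddenLinkFinder()
    finder.feed(html)
    finder.close()
    return finder.visible_links, finder.hidden_links
```

Any link that turns up in the hidden list is worth a closer look in the browser before drawing conclusions.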


Check the backlinks

To check backlinks I use an excellent tool from SEOmoz called Open Site Explorer. It finds all the backlinks pointing to a website and shows the site's Page Authority and Domain Authority. Without an SEOmoz account you only get basic access, but it is still a good starter for ten.

The parameters I tend to use are below (this presentation is a couple of months old, but you can still follow the structure).


Review the social presence

Does the site have an active social presence? It is important for a site to have Facebook and Twitter accounts. If it does, are they updated often? Do they interact with their followers? Do they provide meaningful information to their followers?


On-site basics

The on-site basics are the bread and butter of any SEO audit. The core things I usually look out for are:

- Does the site have h1 tags that represent the content of each page?

- Do all the pages have unique title tags and meta descriptions, and are they relevant to the content of the page?

- Does the site have any canonical URLs, and do they point to the correct place?

- Do all the images have appropriate alt attributes?
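To run these checks over more than a handful of pages, a small script helps. Below is a minimal Python sketch, assuming you have already downloaded each page's HTML into a dict keyed by URL (the crawling step is left out). `OnPageParser` and `audit_pages` are hypothetical names. The script only flags missing or duplicated h1s, titles and meta descriptions, canonicals pointing at a different URL, and images with no alt attribute at all (an empty `alt=""` is valid for purely decorative images, so it isn't flagged). Whether a title or h1 is actually relevant to the page still needs a human eye.

```python
from collections import Counter
from html.parser import HTMLParser


class OnPageParser(HTMLParser):
    """Collects the on-page elements from the checklist for one page."""

    def __init__(self):
        super().__init__()
        self.h1_count = 0
        self.title = ""
        self.description = None
        self.canonical = None
        self.images_missing_alt = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "h1":
            self.h1_count += 1
        elif tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = (attrs.get("content") or "").strip() or None
        elif tag == "link" and (attrs.get("rel") or "").lower() == "canonical":
            self.canonical = attrs.get("href")
        elif tag == "img" and "alt" not in attrs:
            self.images_missing_alt.append(attrs.get("src"))

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_pages(pages):
    """pages maps URL -> HTML string. Returns a list of issues for each URL."""
    parsed = {}
    for url, html in pages.items():
        parser = OnPageParser()
        parser.feed(html)
        parser.close()
        parsed[url] = parser

    # Count titles and descriptions across the whole site to spot duplicates.
    titles = Counter(p.title.strip() for p in parsed.values())
    descriptions = Counter(p.description for p in parsed.values() if p.description)

    issues = {}
    for url, p in parsed.items():
        found = []
        if p.h1_count == 0:
            found.append("no h1")
        title = p.title.strip()
        if not title:
            found.append("missing title")
        elif titles[title] > 1:
            found.append("duplicate title")
        if not p.description:
            found.append("missing meta description")
        elif descriptions[p.description] > 1:
            found.append("duplicate meta description")
        if p.canonical and p.canonical != url:
            found.append("canonical points to " + p.canonical)
        if p.images_missing_alt:
            found.append(f"{len(p.images_missing_alt)} image(s) without an alt attribute")
        issues[url] = found
    return issues
```

A canonical pointing elsewhere is often intentional, so treat that flag as "check this" rather than an error.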


Have the sitemap and robots.txt file been set up?

The main things to look for are: does the site have an XML sitemap, does it include all the pages, and how often is it updated? The robots.txt file is important to investigate to see whether any pages have been blocked and, if they have, why.
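Both checks can be scripted with Python's standard library. This sketch assumes you have already fetched the text of the sitemap and of robots.txt; `sitemap_entries` and `blocked_by_robots` are hypothetical names. It lists each sitemap URL with its optional `<lastmod>` date, which gives a rough idea of how often the sitemap is updated, and reports which of those URLs robots.txt blocks. It only handles a plain `<urlset>` sitemap (not a sitemap index file), and `urllib.robotparser` uses simple prefix matching, so it may not match how Google handles wildcards in robots.txt.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

# Namespace used by <urlset> documents that follow the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_entries(sitemap_xml):
    """Return (loc, lastmod) pairs; lastmod is None when an entry omits it."""
    root = ET.fromstring(sitemap_xml)
    entries = []
    for url in root.findall("sm:url", NS):
        loc = url.findtext("sm:loc", default="", namespaces=NS).strip()
        lastmod = url.findtext("sm:lastmod", namespaces=NS)
        entries.append((loc, lastmod))
    return entries


def blocked_by_robots(robots_txt, urls, user_agent="*"):
    """Return the URLs that robots.txt disallows for the given user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]
```

Running `blocked_by_robots` over the sitemap's own URLs is a handy cross-check: a page listed in the sitemap but blocked by robots.txt is sending search engines mixed signals, and is exactly the "if they have, why" question to ask.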

A final point on XML sitemaps: when you create your own, make sure it is submitted not just to Google Webmaster Tools but also to Yahoo Site Explorer and Bing Webmaster Tools. They may not have the lion's share of search traffic, but they can gain you easy traffic.


URL structure

Does each page's URL accurately represent what is on the page? For example, an iPod touch product page could use the URL www.mysite.co.uk/mp3-players/ipod-touch, which tells search engines what the page is about.
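When suggesting URLs, or checking that existing ones describe their pages, something like this Python sketch can help. `slugify`, `product_url` and `url_describes_page` are hypothetical helpers: the first turns a category or product name into a lower-case, hyphen-separated URL segment, the second builds a URL in the style of the example above, and the third checks whether every word of a page's title (or h1) appears in its URL path. Requiring every word is deliberately strict, so treat a `False` as a prompt to look at the page rather than a verdict.

```python
import re
import unicodedata
from urllib.parse import urlparse


def slugify(text):
    """Lower-case, drop accents, and replace runs of other characters with hyphens."""
    ascii_text = unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")


def product_url(domain, category, product):
    """Build a descriptive URL of the form domain/category-slug/product-slug."""
    return f"{domain}/{slugify(category)}/{slugify(product)}"


def url_describes_page(url, page_title):
    """True if every word of the page title appears in the URL path."""
    path_words = set(filter(None, re.split(r"[/\-_.]+", urlparse(url).path.lower())))
    title_words = set(filter(None, slugify(page_title).split("-")))
    return bool(title_words) and title_words <= path_words
```

For instance, `product_url("www.mysite.co.uk", "MP3 Players", "iPod Touch")` gives `www.mysite.co.uk/mp3-players/ipod-touch`, while a URL like `product.php?id=123` fails the check for the same page.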


Are there any broken links?

Xenu (Xenu's Link Sleuth) is a great free tool that crawls the site to check whether there are any broken links.
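Xenu does the crawling and checking for you. If you already have a list of URLs (from the sitemap, say), the checking half can be sketched in Python as below. `http_status` and `find_broken_links` are hypothetical names. The sketch sends a HEAD request to each URL, lets `urllib` follow redirects, and treats any 4xx/5xx status or a failed connection as broken. Some servers answer HEAD with 405 even when the page is fine, so a fuller checker would retry those with GET. The status function is passed in as a parameter so the logic can be tested without touching the network.

```python
from urllib.error import HTTPError
from urllib.request import Request, urlopen


def http_status(url, timeout=10):
    """Return the final HTTP status for url, or None if no response came back."""
    request = Request(url, method="HEAD")
    try:
        with urlopen(request, timeout=timeout) as response:
            return response.status
    except HTTPError as err:      # 4xx/5xx responses are raised as HTTPError
        return err.code
    except OSError:               # DNS failures, refused connections, timeouts
        return None


def find_broken_links(urls, get_status=http_status):
    """Return (url, status) pairs for URLs that error or do not respond."""
    broken = []
    for url in urls:
        status = get_status(url)
        if status is None or status >= 400:
            broken.append((url, status))
    return broken
```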


The look and feel of the site

However well a site performs in search, it still has to look and behave well for the user. Does the page flow correctly? What is the colour scheme like, and what about the typography?